(Image Source: Apple)

On Monday, June 9, 2025, at the opening keynote of WWDC, Apple officially unveiled Liquid Glass, its next-generation design language. Introduced by CEO Tim Cook and design chief Alan Dye, Liquid Glass took center stage at the start of the event, setting the tone for what Apple called a “new chapter in interface design.”

Presented with cinematic visuals and ambient soundscapes, the announcement drew immediate attention from developers, designers, and tech enthusiasts worldwide. While Apple saved in-depth demonstrations for later sessions, the debut of Liquid Glass stood out as one of the most talked-about moments of the conference.

What Is Liquid Glass?

(Image Source: Apple)

Liquid Glass is Apple’s bold new design system that blends transparency, fluidity, and dynamic light interactions to create interfaces that appear to float and respond to context. It draws inspiration from physical materials, most notably glass, and infuses them with a digital fluidity that gives depth and movement to everyday UI elements. While reminiscent of the translucency introduced in iOS 7 and the dimensionality of Mac OS X’s Aqua interface, Liquid Glass takes these concepts further, creating an interface that feels alive and reactive. Let’s cover some of its features.

Design Philosophy and Visual Language

(Image Source: Apple)

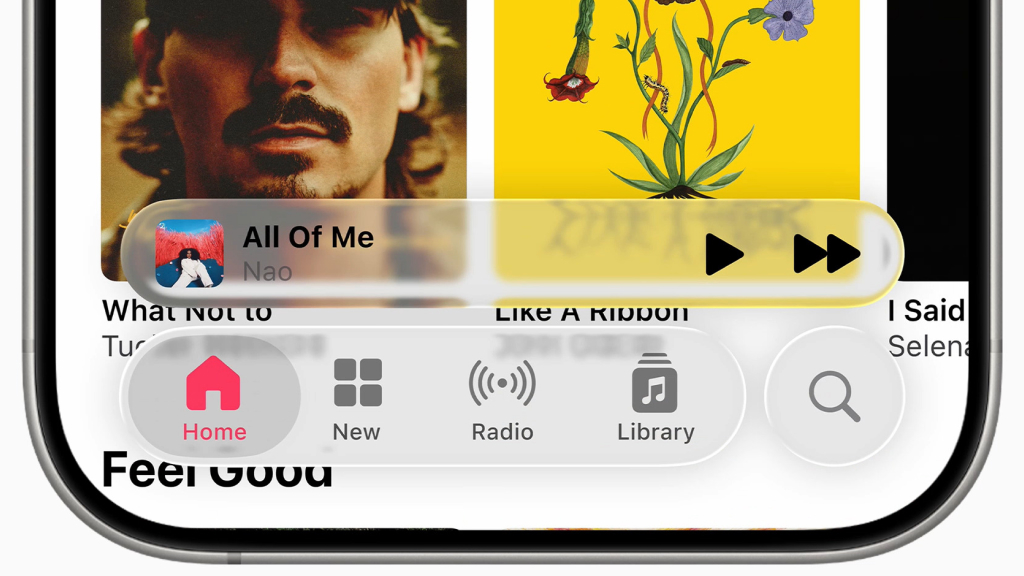

The core visual aesthetic of Liquid Glass revolves around clarity, elevation, and light play. UI components such as menus, toolbars, and widgets appear suspended in space, subtly warping and reflecting the content behind and around them. Drawing from the natural qualities of glass, Liquid Glass introduces fluid transparency, dimensional layering, and adaptive motion to the interface.

A defining feature is the “lensing effect,” which distorts and magnifies background content as users interact with UI elements, offering a sense of depth and responsiveness. This effect gently directs the user’s attention, improving usability in addition to adding visual flair. Shadows, highlights, and color gradients dynamically shift in response to content and environmental cues, like ambient light or device movement.
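Apple has not published how its renderer produces the lensing effect. As a rough illustration of the general idea only, the Swift sketch below models a simple circular magnifier: for each point under the lens it picks a background sample point pulled toward the lens center, so content appears enlarged in the middle and blends back to normal at the rim. The `Point` type, the `lensSample` function, and the linear falloff are hypothetical choices for this example, not Apple APIs.

```swift
// Toy model of a circular magnifying lens, for illustration only (not Apple's renderer).

struct Point: Equatable {
    var x: Double
    var y: Double
}

/// Returns the background coordinate to sample for screen point `p` when a circular
/// lens with the given `center` and `radius` sits over the content.
/// A `magnification` above 1 enlarges content; points outside the lens are unchanged.
func lensSample(_ p: Point, center: Point, radius: Double, magnification: Double) -> Point {
    let dx = p.x - center.x
    let dy = p.y - center.y
    let distance = (dx * dx + dy * dy).squareRoot()
    guard distance < radius, magnification > 0 else { return p }

    // t runs from 0 at the lens center to 1 at the rim.
    let t = distance / radius
    // Blend the sampling scale from 1/magnification at the center to 1 at the rim,
    // so the lens edge meets the surrounding content without a visible seam.
    let scale = (1.0 / magnification) * (1.0 - t) + t
    return Point(x: center.x + dx * scale, y: center.y + dy * scale)
}

// Usage: a lens of radius 20 at the origin with 2x magnification at its center.
let origin = Point(x: 0, y: 0)
print(lensSample(Point(x: 10, y: 0), center: origin, radius: 20, magnification: 2))
// prints Point(x: 7.5, y: 0.0)
```

Here a point 10 units from the center shows background content taken from 7.5 units out, so that content is stretched outward and looks magnified; points beyond the rim are returned unchanged. A production renderer would also add refraction, blur, and highlights, which this sketch deliberately leaves out.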

Integration Across Apple Platforms

(Image Source: Apple)

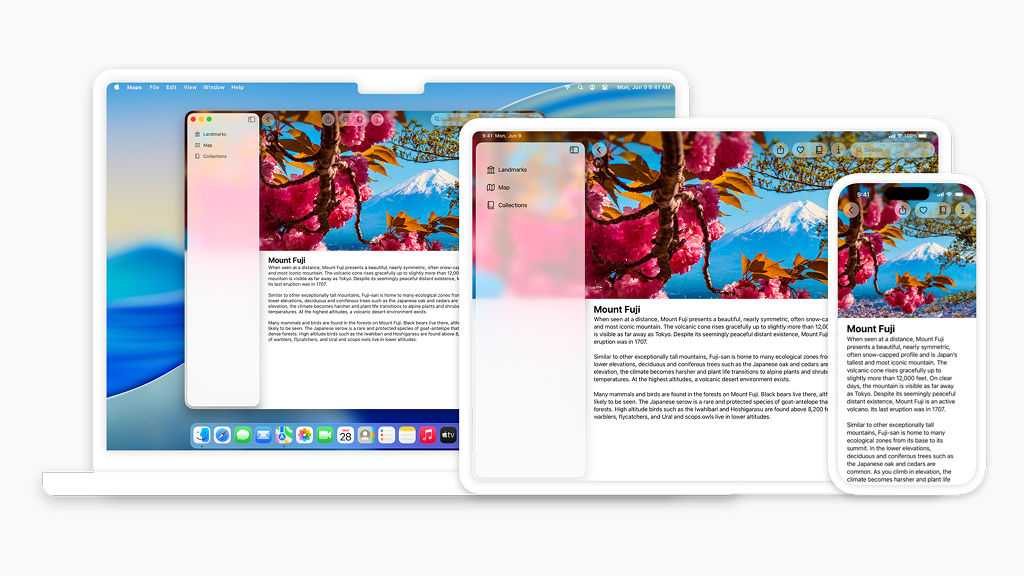

Liquid Glass isn’t confined to one product; it marks a unified design evolution across Apple’s entire ecosystem. Debuting with iOS 26, iPadOS 26, macOS Tahoe 26, watchOS 26, tvOS 26, and visionOS 26 (Apple now uses a single, year-based version number across its platforms), this design language brings a coherent visual identity that seamlessly spans devices of all shapes, sizes, and input methods. Whether users are tapping on an iPhone, navigating with a Mac trackpad, rotating the Digital Crown on an Apple Watch, or interacting with content in spatial environments using Vision Pro, the design responds with consistent behavior and fluid transitions.

This cross-platform integration ensures that core UI elements like panels, widgets, notifications, and toolbars share a common visual grammar: they float, refract, and adapt in similar ways. It enhances continuity, allowing users to carry their interaction habits intuitively from one device to another.

Liquid Glass also strengthens Apple’s broader ecosystem vision, bridging the gap between 2D and spatial interfaces. The design naturally connects with visionOS, signaling Apple’s long-term commitment to spatial computing. For developers, this means one design language to rule them all, backed by updated APIs that support platform-specific nuances while maintaining a unified aesthetic.

Developer Tools and APIs

(Image Source: Apple)

To help developers embrace the new Liquid Glass design language, Apple introduced a suite of updated tools and APIs across SwiftUI, UIKit, and AppKit. These enhancements let app creators integrate fluid translucency, depth, and light-responsive elements with minimal effort while maintaining performance and accessibility. Standard components such as navigation bars, toolbars, and tab bars adopt the new material automatically when an app is rebuilt with the latest SDKs, and developers can also apply Liquid Glass, with its dynamic blur, adaptive tinting, and responsive highlights, to their own interface components (in SwiftUI, through the `glassEffect()` view modifier), ensuring their apps feel consistent with Apple’s new visual direction.

A key addition is Icon Composer, a new app that lets developers build layered icons that adapt to light and dark modes, as well as tinted and clear appearances, while harmonizing with the layered, luminous aesthetic of Liquid Glass. These icons are no longer static assets but dynamic, scalable visuals that respond to system context.

Apple also updated its Human Interface Guidelines (HIG), offering best practices on integrating Liquid Glass without sacrificing usability or performance. Developers are encouraged to maintain visual clarity, consider real-time responsiveness, and build with accessibility in mind.

The tools are designed not only for aesthetics but also for functionality, ensuring apps remain intuitive while visually aligned with Apple’s broader spatial computing vision. With these APIs, Apple empowers developers to create interfaces that are not just beautiful, but deeply immersive and future-ready.

Redesign of Core Apple Apps

(Image Source: Apple)

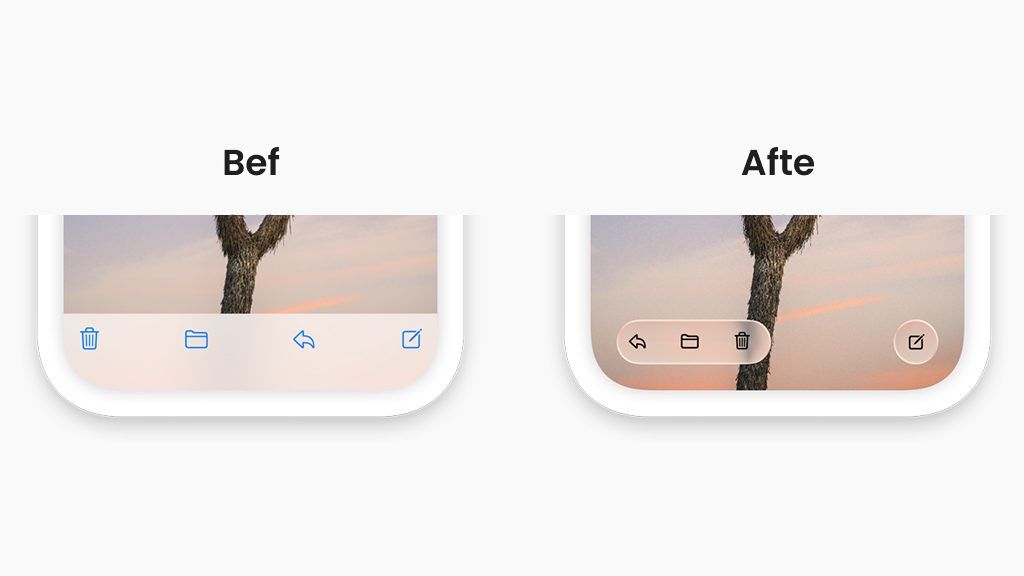

With the introduction of Liquid Glass, Apple has reimagined many of its core apps to showcase the full potential of its new design language. Built-in apps like Messages, Safari, Maps, and Camera now feature interfaces that feel dynamic, elevated, and more immersive than ever before. Each app has been thoughtfully redesigned to embrace fluid layering, translucency, and responsive lighting.

In Messages, conversation bubbles appear to float within glass-like panels that subtly respond to scrolling and device movement, giving a sense of depth and motion. Safari gains shimmering tab bars and a more fluid browsing experience, with toolbars that subtly fade away and then return in response to user activity. In Maps, directions and location cards now sit on top of layered backdrops, which improves clarity and gives the view a spatial, almost augmented-reality feel.

Even the minimalist Camera app has received subtle enhancements, with overlays and controls that adapt their appearance based on lighting and context. The Phone app uses gentle motion and blur effects to distinguish call screens, with background colors and depth cues shifting dynamically based on contact photos or Focus modes.

Interactivity and Dynamic Behavior

(Image Source: Apple)

What sets Liquid Glass apart is its responsiveness to real-world context. Elements shift and react based on lighting, movement, and app behavior. For instance, a toolbar might slightly elevate or change translucency when the device is tilted, giving a sense of realism and interactivity.

These effects are rendered in real time on the device, so interfaces adapt instantly without relying on the cloud. Motion sensors, ambient lighting conditions, and user gestures all influence how elements behave, creating a user experience that feels personalized, intuitive, and immersive.
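Apple has not documented how sensor data drives these effects. Purely as a hypothetical illustration, the Swift sketch below maps device tilt (roll and pitch, in radians) to a normalized highlight offset and smooths it with an exponential moving average, a common way to keep noisy motion-sensor readings from making UI jitter. The type names, the 0.5-radian tilt range, and the smoothing factor are assumptions made for this example.

```swift
// Hypothetical model of a tilt-driven specular highlight; not Apple's implementation.

/// Highlight position on an element, normalized to -1...1 on each axis (0 means centered).
struct HighlightOffset: Equatable {
    var x = 0.0
    var y = 0.0
}

struct TiltHighlight {
    /// Tilt, in radians, at which the highlight reaches the element's edge (assumed value).
    let maxTilt: Double
    /// Smoothing factor in (0, 1]: 1 follows the sensor exactly, smaller values lag but filter noise.
    let smoothing: Double
    private(set) var offset = HighlightOffset()

    init(maxTilt: Double = 0.5, smoothing: Double = 0.2) {
        precondition(maxTilt > 0, "maxTilt must be positive")
        precondition(smoothing > 0 && smoothing <= 1, "smoothing must be in (0, 1]")
        self.maxTilt = maxTilt
        self.smoothing = smoothing
    }

    /// Feeds one sensor reading and returns the updated, smoothed offset.
    @discardableResult
    mutating func update(roll: Double, pitch: Double) -> HighlightOffset {
        // Map tilt to the -1...1 range, clamping anything beyond maxTilt.
        let targetX = Self.clamp(roll / maxTilt)
        let targetY = Self.clamp(pitch / maxTilt)
        // Exponential moving average: move a fraction of the way toward the target.
        offset.x += smoothing * (targetX - offset.x)
        offset.y += smoothing * (targetY - offset.y)
        return offset
    }

    private static func clamp(_ value: Double) -> Double {
        min(max(value, -1.0), 1.0)
    }
}

// Usage: two simulated readings, as if the device is held tilted to the right.
var highlight = TiltHighlight(maxTilt: 0.5, smoothing: 0.5)
highlight.update(roll: 0.5, pitch: 0.0)
highlight.update(roll: 0.5, pitch: 0.0)
print(highlight.offset)
// prints HighlightOffset(x: 0.75, y: 0.0)
```

A smaller `smoothing` value makes the highlight trail the device more lazily, trading responsiveness for stability. In a real app the readings would come from a motion source such as Core Motion rather than hard-coded values.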

Availability and How to Try It

(Image Source: Apple)

Developers can begin working with Liquid Glass today by downloading the latest beta versions of iOS 26, macOS Tahoe, and other updated platforms. Public beta releases are set to roll out in July 2025, with the full stable launch expected this fall.

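Compatible devices include:

- iPhone 11 and later, plus iPhone SE (2nd generation) and later

- Apple silicon Macs, plus a handful of late Intel models

- iPad (8th generation) and later, along with recent iPad mini, iPad Air, and iPad Pro models

- Apple Watch Series 6 and later, Apple Watch SE (2nd generation), and Apple Watch Ultra

- Apple TV HD and Apple TV 4K

- Vision Pro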

Those eager to try it can explore demo apps and redesigned system apps in the betas to experience Liquid Glass firsthand.

Public Reaction and Critical Reception

(Image Source: Apple)

The unveiling of Liquid Glass at WWDC 2025 sparked widespread discussion across the tech community. Many designers and developers praised its bold, immersive aesthetic, calling it Apple’s most visually striking interface since the introduction of iOS 7. Tech reviewers highlighted its cinematic qualities and seamless integration across devices, viewing it as a significant step toward spatial computing. Others were more critical, raising concerns about the legibility of text and controls layered over busy, translucent backgrounds in the early betas.

The overall consensus is that Liquid Glass represents a daring new chapter in Apple’s design philosophy, one that prioritizes depth, dynamism, and continuity. It may not be perfect yet, but it sets the stage for Apple’s long-term vision of immersive, intelligent interfaces.

Apple’s Liquid Glass is more than a visual update: it’s a statement of intent. By introducing a design system that reacts, responds, and reflects, Apple is setting the stage for a future shaped by spatial computing, AI, and immersive environments. While it may take time for developers and users to fully adapt, the foundation has been laid for the next decade of digital experience. As Apple continues to blur the lines between software and reality, Liquid Glass stands as a bold and beautiful beginning.