For much of the last half-decade, “Dojo” was one of Tesla’s most ambitious and most mystifying bets. Promised as a homegrown, exascale AI training cluster that would process the video torrent from Tesla’s fleet and unlock fully autonomous driving, Dojo grew from a swaggering tech demo into a multi-year engineering project that showcased both the audacity and the limits of in-house silicon and supercomputing at one of the world’s most visible tech-manufacturing companies.

By mid-2025, however, the project’s trajectory had shifted dramatically: teams were disbanded, engineers were reassigned, and the company moved toward relying on external partners. The story of Dojo is a lesson in technical breakthroughs, bold promises, strategic missteps, and the tough economics of building large-scale AI systems.

Turning Ideas Into Custom Chips

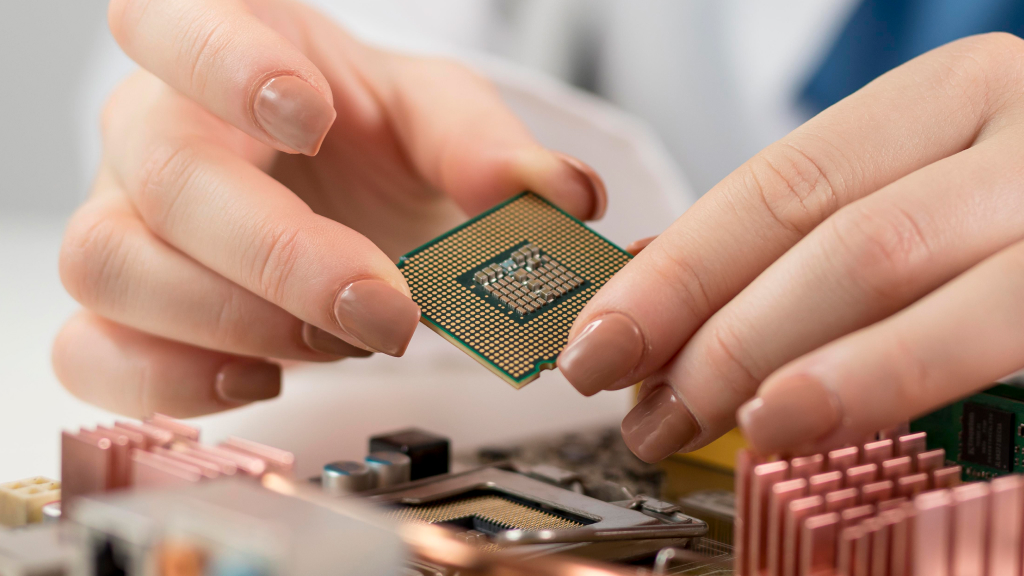

Dojo began as a direct answer to a simple problem: Tesla’s Full Self-Driving (FSD) stack needed huge amounts of video data to train vision models, and off-the-shelf data-center GPUs were expensive and optimized for different workloads. Tesla’s solution was to design its own chip (the D1), interconnect, and systems. This vertically integrated stack, if it worked, promised better price-performance for the kinds of large, video-heavy models Tesla wanted to train.

The D1 chip and the Dojo architecture were presented as a purpose-built alternative to GPU clusters, featuring many small cores optimized for tensor and video workloads, a dense on-chip memory design, and a custom networking fabric to reduce latency and enhance scaling.

Early demos and disclosures emphasized scale. Tesla described Dojo ExaPODs that could, in theory, reach exaflop-level compute in certain low-precision formats and showed racks and cabinets intended to stack tiles tightly for minimal communication overhead. The pitch was simple: build your own chips, tune them to your data, and you’ll beat general-purpose GPUs on cost and throughput. It resonated with engineers and investors who had watched the cloud GPU market balloon in price.

Early Progress and Confident Announcements

When Dojo made its public debut, Tesla engineers shared striking anecdotes, including claims that early test systems were so powerful they tripped local power infrastructure, a vivid illustration of the system’s immense energy demands and density. The company also showcased the design sophistication of the D1 and talked up plans to expand Dojo to handle all of Tesla’s training needs.

But building a custom supercomputer is different from building a prototype. Scaling a chip design into high-yield silicon, building the cooling and power infrastructure for dense racks, writing and optimizing compilers and frameworks, and proving that a proprietary architecture can reliably accelerate production training workloads are huge, expensive undertakings.

Market and Strategic Pressures

Tesla’s retreat from the Dojo-first strategy did not happen in a vacuum. The competitive landscape for AI hardened as hyperscalers and AI startups poured money into GPU and accelerator farms, and vendors optimized hardware and software stacks for large language models and vision models alike. For companies without the scale of a cloud provider, maintaining a custom silicon roadmap that competes on cost, performance, and software ecosystem becomes an uphill battle.

The costs of wafer sourcing, yield optimization, and rapid chip iteration tend to favor companies that can spread billions in R&D across large-scale operations. For Tesla, balancing automotive capital needs with simultaneous investments in robotics and vehicle production made sustaining Dojo as a standalone project increasingly difficult.
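
The amortization argument above can be made concrete with a small sketch. Everything in it is an assumption for illustration: the function names are hypothetical, and the dollar figures are placeholders, not Tesla's actual costs or any vendor's pricing. It only shows how a fixed R&D bill shrinks per chip as deployment volume grows, and where a build-versus-buy break-even would sit under those made-up inputs.

```python
import math


def amortized_cost_per_chip(fixed_rnd_usd: float, unit_cost_usd: float,
                            chips_deployed: int) -> float:
    """Per-chip cost once fixed R&D is spread over every chip deployed."""
    if chips_deployed <= 0:
        raise ValueError("chips_deployed must be positive")
    return unit_cost_usd + fixed_rnd_usd / chips_deployed


def break_even_volume(fixed_rnd_usd: float, unit_cost_usd: float,
                      vendor_price_usd: float) -> int:
    """Smallest volume at which building is no more expensive than buying."""
    saving_per_chip = vendor_price_usd - unit_cost_usd
    if saving_per_chip <= 0:
        raise ValueError("in-house unit cost must be below the vendor price")
    return math.ceil(fixed_rnd_usd / saving_per_chip)


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to make the arithmetic easy to follow.
    FIXED_RND = 1_000_000_000   # one-time design, tooling, and software cost
    UNIT_COST = 5_000           # marginal cost to manufacture one chip
    VENDOR_PRICE = 25_000       # price of a comparable purchased accelerator

    for volume in (10_000, 100_000, 1_000_000):
        cost = amortized_cost_per_chip(FIXED_RND, UNIT_COST, volume)
        print(f"{volume:>9,} chips -> ${cost:,.0f} per chip")

    print("break-even volume:",
          break_even_volume(FIXED_RND, UNIT_COST, VENDOR_PRICE))
```

Under these placeholder numbers, the in-house chip only undercuts the purchased one after tens of thousands of units, which is the sense in which volume favors hyperscale operators. The model ignores yield, software cost, and multi-generation roadmaps, all of which would push the break-even point further out.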

Dojo wasn’t a total failure. It produced real engineering advances: a custom ML-oriented ISA, lessons on scaling dense mesh networks and on-chip memory hierarchies, and prototypes that tested new approaches to training video models. The program also highlighted where Tesla’s strengths, chiefly tight integration between vehicles and software, clash with the scale and long time horizons required for custom silicon.

Challenges and Setbacks

While Dojo promised groundbreaking performance, the project faced numerous hurdles that highlighted the complexity of building a custom AI supercomputer. Manufacturing and chip yields proved to be a significant challenge. Scaling the D1 chip from prototype to mass production required overcoming defects, optimizing fabrication processes, and ensuring consistent performance, a difficult task even for a company with Tesla’s resources.

Software and system optimization added another layer of difficulty. Tesla needed to develop and fine-tune compilers, training frameworks, and orchestration tools specifically for Dojo’s architecture. Ensuring that thousands of chips could communicate efficiently across tiles, cabinets, and ExaPODs while reliably training massive video datasets pushed both hardware and software teams to their limits.

The project also faced human and organizational challenges. Key engineers left Dojo to pursue opportunities elsewhere, and remaining staff were often reassigned to other Tesla initiatives, such as vehicle AI systems or robotics. This turnover slowed development and added uncertainty about the program’s long-term viability.

Finally, Dojo was affected by broader strategic pressures. Tesla had to balance its ambitious AI efforts with the operational demands of vehicle production, energy products, and other high-profile projects. Combined, these technical, organizational, and strategic factors ultimately limited Dojo’s ability to scale as initially envisioned, forcing the company to rethink its approach and rely more heavily on external AI hardware providers.

What It Means for Tesla and the Industry

For Tesla, winding down Dojo as a flagship standalone project marks a pragmatic change in direction. By reallocating talent and leaning on external suppliers, the company can access mature toolchains and rapid hardware improvements without bearing full chip-cycle risk. For the industry, Dojo’s arc is a cautionary tale: ambitious in-house silicon programs can deliver differentiated advantages, but only if the company committing to them can sustain the cost, time, and operational complexity.

Some observers call Dojo’s disbanding a failure; others describe it as a strategic pivot, one that acknowledges the practical tradeoffs between bespoke hardware and time-to-market. Whichever label fits best, Dojo’s rise and fall offers a compact case study in modern AI engineering, covering both the technical promise of custom accelerators and the stark commercial realities that ultimately decide whether prototypes become platforms.

For Tesla, the Dojo saga will likely live on in the company’s engineering playbooks as a set of useful lessons about what to build internally, what to buy, and when to change course.